Global Collaboration: Honda Research Institute – Ohio, Silicon Valley, EU, and Japan

Role: Design Researcher

Duration: 2025 – Present

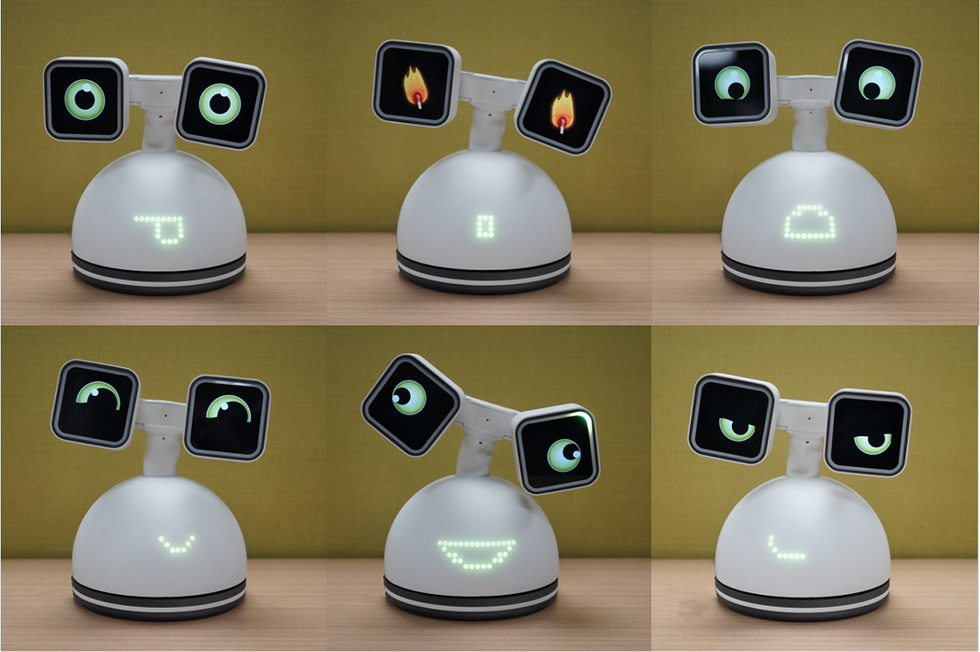

As part of the Pedagogical Futures team at Honda Research Institute (HRI), I’m contributing to the design and research of Haru, Honda’s social robot, exploring how embodied AI can nurture communication and empathy in children. The project investigates how social robots, integrated with LLMs, can support teachers and students in developing emotional intelligence, collaboration, and self-expression in real-world learning environments.

Context

Children’s communication skills have been deeply affected by increased screen-based learning and post-pandemic social anxiety.

Our challenge:

How might we design and evaluate human–robot interactions that strengthen children’s ability to express themselves, collaborate, and resolve conflict?

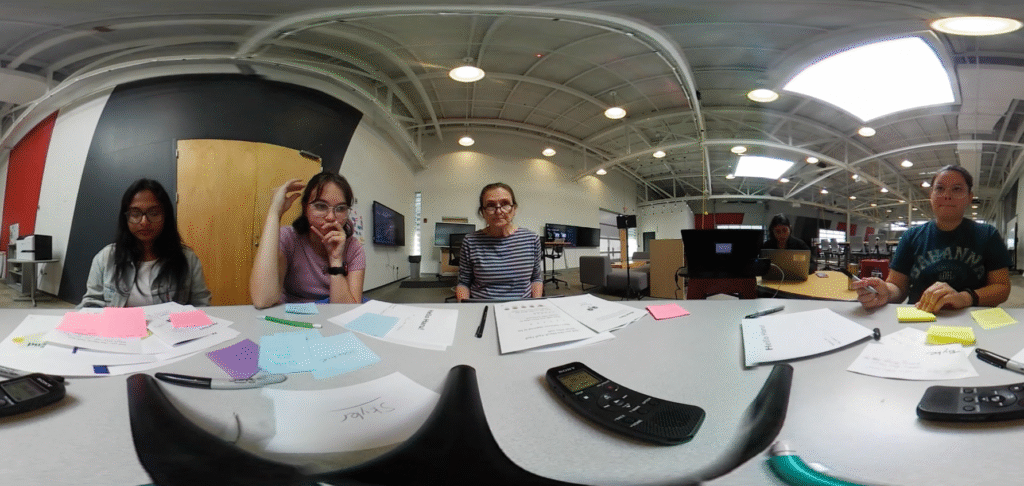

We worked with teachers and students to explore how Haru could act as a co-teacher, peer, and empathetic collaborator within elementary and middle school classrooms.

Approach

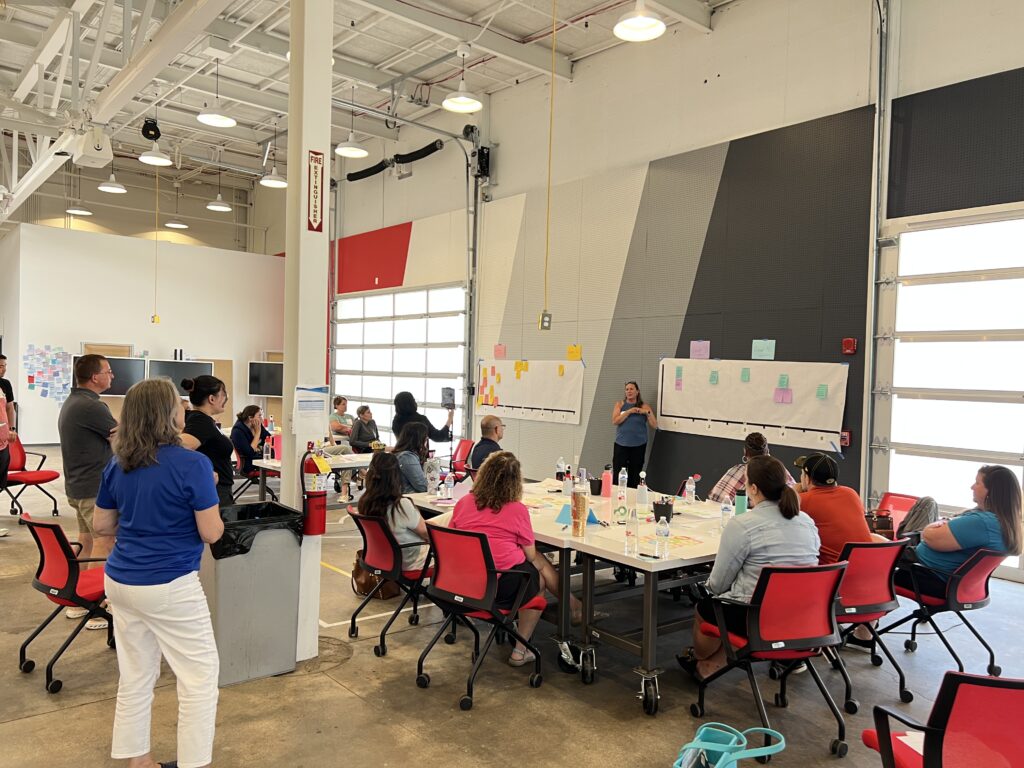

We adopted a research-through-co-design process that combined futures-oriented pedagogy with human-centered AI design.

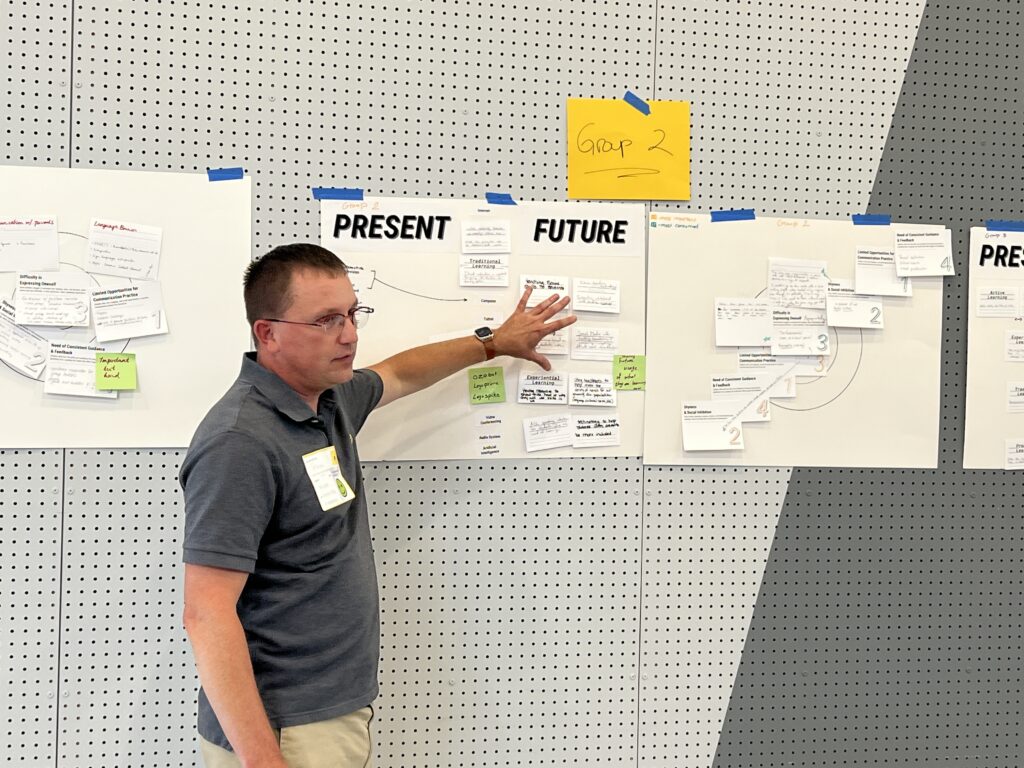

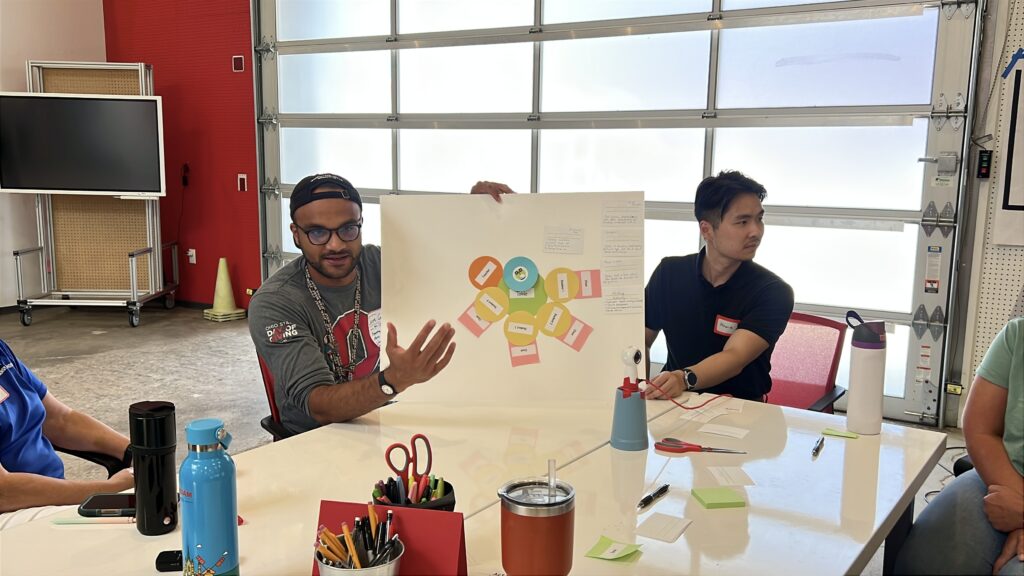

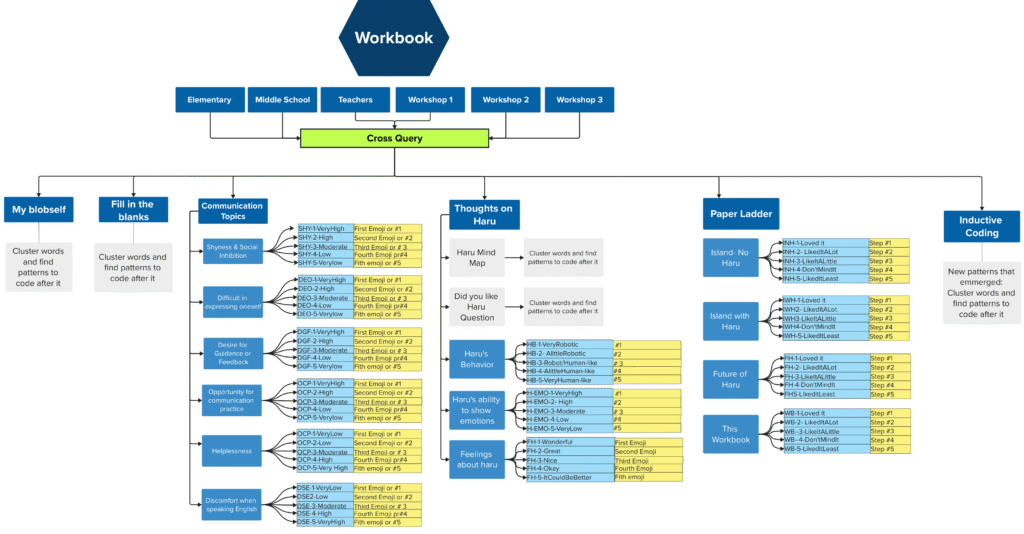

Teacher Workshops: Collaborated with 19 elementary and middle school teachers to co-design classroom activities with Haru. Through participatory mapping and scenario-building, we identified six major communication barriers, ranging from shyness and language differences to learned helplessness.

Pedagogical Fusion Framework: Synthesized findings into a new framework that blends traditional, active, experiential, and proactive learning models, shifting toward social constructionism, where students learn by co-designing with others, including AI. [information redacted]

Activity Design & Pilot Studies: Developed interactive sessions and workbooks for elementary and middle school teachers and students to test Haru’s role as a collaborator, peer, and facilitator. The activities promote empathy, conflict resolution, and self-expression.

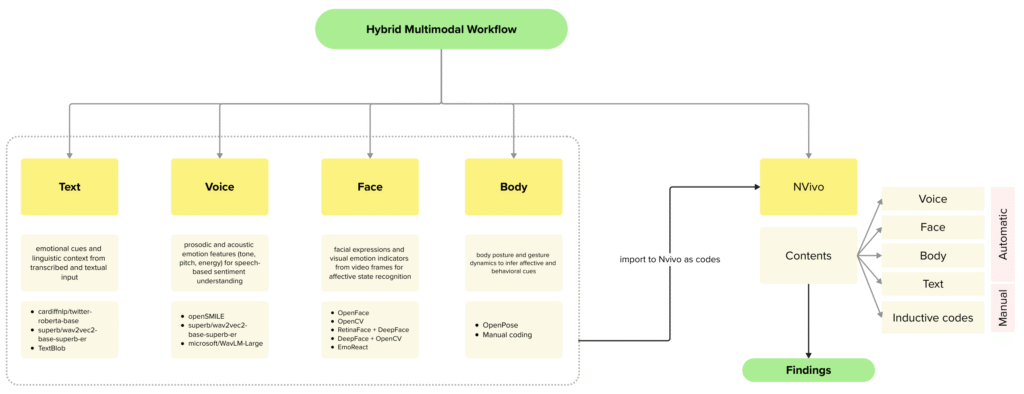

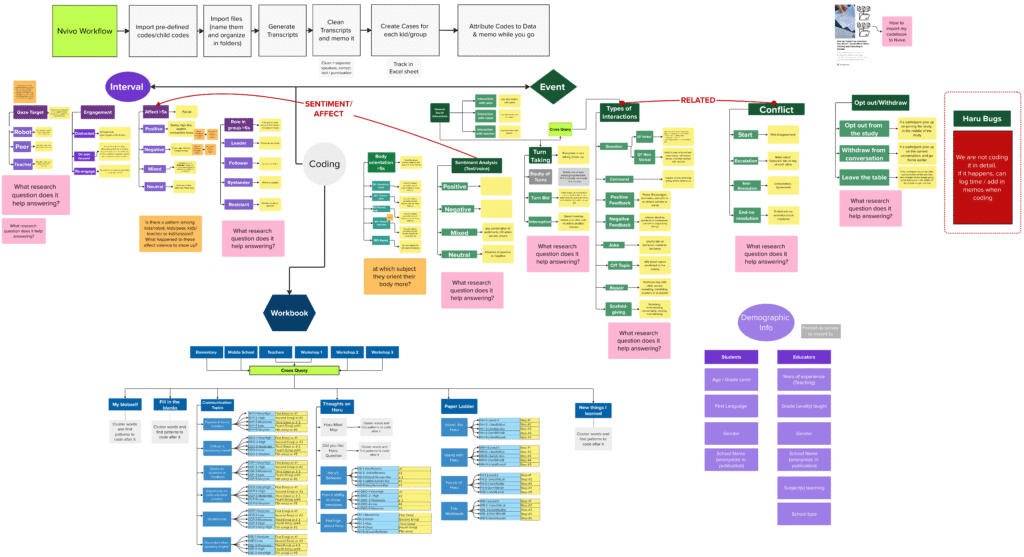

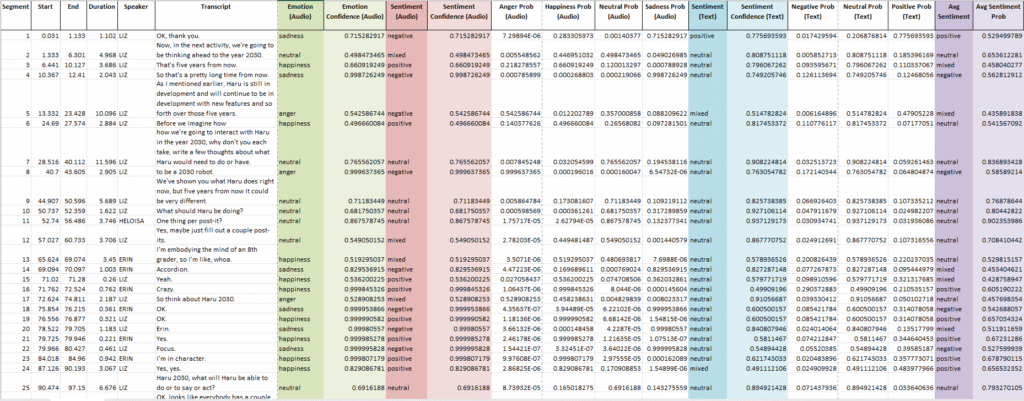

Hybrid Multimodal Analysis: Created a novel workflow combining qualitative coding (NVivo) with multimodal AI analytics (facial, voice, and text sentiment) to study emotional engagement and interaction dynamics.

Analysis Framework

Qualitative Layer: NVivo thematic coding of student and teacher reflections from session workbooks, focusing on communication style, participation, and emotional tone.

Computational Layer: AI-assisted sentiment analysis across voice, text, and facial cues to detect affective patterns.

Integration Layer: Combined both datasets through matrix visualization and temporal mapping to track "spark moments": points of emotional resonance or disruption triggered by Haru’s actions.

Validation Layer: Compared machine learning predictions with human coders’ interpretations, exposing key insights on empathy, bias, and the limits of AI emotion recognition.
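One way the comparison in the Validation Layer could be quantified is with a chance-corrected agreement score. The sketch below is illustrative only: it assumes each session is split into time segments that carry one human-coded label and one model-predicted label, and it uses Cohen's kappa as the agreement measure, which is an assumption rather than a description of the project's actual method. The function names, timestamps, and labels are all hypothetical, not project data.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Proportion of segments where both raters chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


def disagreements(segments, human, model):
    """Return (segment, human_label, model_label) where the two differ."""
    return [(s, h, m) for s, h, m in zip(segments, human, model) if h != m]


# Hypothetical example: six 30-second segments from one session.
segments = ["00:00", "00:30", "01:00", "01:30", "02:00", "02:30"]
human = ["positive", "neutral", "negative", "positive", "neutral", "positive"]
model = ["positive", "neutral", "positive", "positive", "negative", "positive"]

kappa = cohen_kappa(human, model)
flagged = disagreements(segments, human, model)

print(f"kappa = {kappa:.3f}")  # kappa = 0.429
for segment, human_label, model_label in flagged:
    print(f"{segment}: human={human_label}, model={model_label}")
```

Segments where the two labels differ are the natural candidates for closer qualitative review, since they are where the limits of automated emotion recognition are most likely to show.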

Impact & Next Steps

This project is informing the development of next-generation pedagogical systems at HRI that integrate embodied AI and social learning. The upcoming study phase involves six schools, testing Haru’s ability to act as a co-teacher and co-learner.

Our findings are shaping future publications on:

- Hybrid human–AI pedagogical design

- The Pedagogical Fusion Framework for adaptive learning

- Design methods for social robotics in education